Introduction

All the Atbash repositories are still under heavy development, which is why they are released in one go. Over the last few days, such a release of almost all libraries was performed.

This post gives a short overview of what you can find in it.

Big features

The big feature changes can be found in

- Atbash JWT support related to cryptographic key support.

- Atbash Rest client, a Java 7 port of the MicroProfile spec.

- Atbash Octopus, where Keycloak, the MicroProfile JWT Auth spec, and interoperability between schemes are central in this release.

Cryptographic key support

Since there are many formats in which keys can be persisted (PEM, Java KeyStore, JWK, etc.), they are all internally stored as an AtbashKey. It contains the key itself (as a Java object), the identification, and the type of the key (like RSA, private or public part, etc.).

Creating such keys can be achieved by using the class KeyGenerator, with the method generateKeys(). This class is available as a CDI instance or can be instantiated directly in those environments or locations where no CDI is available.

The parameter of the generateKeys() method defines which keys are created. This parameter can be created using a builder pattern.

```java
// generator is a KeyGenerator, injected through CDI or instantiated directly
RSAGenerationParameters generationParameters = new RSAGenerationParameters.RSAGenerationParametersBuilder()
        .withKeyId("the-kid")
        .build();

List<AtbashKey> atbashKeys = generator.generateKeys(generationParameters);
```

In the above example, multiple keys are generated because RSA is an asymmetric algorithm, so both a private and a public part are created.
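To see why a single generation request yields more than one key, the following minimal sketch uses only the JDK's own KeyPairGenerator, not the Atbash KeyGenerator. The class name RsaPairSketch and the 2048-bit key size are assumptions made for illustration.

```java
import java.security.KeyPair;
import java.security.KeyPairGenerator;
import java.security.NoSuchAlgorithmException;
import java.security.interfaces.RSAPrivateKey;
import java.security.interfaces.RSAPublicKey;

final class RsaPairSketch {

    // Generates one RSA key pair with the plain JDK API (hypothetical helper, not Atbash code).
    static KeyPair generatePair(int keySize) {
        try {
            KeyPairGenerator generator = KeyPairGenerator.getInstance("RSA");
            generator.initialize(keySize);
            return generator.generateKeyPair();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("RSA is not available on this JVM", e);
        }
    }

    public static void main(String[] args) {
        KeyPair pair = generatePair(2048);
        // One generation step produces two related keys that share the same modulus.
        RSAPublicKey publicKey = (RSAPublicKey) pair.getPublic();
        RSAPrivateKey privateKey = (RSAPrivateKey) pair.getPrivate();
        System.out.println("public modulus bits:  " + publicKey.getModulus().bitLength());
        System.out.println("private modulus bits: " + privateKey.getModulus().bitLength());
    }
}
```

A library that wraps each part in its own key object, as described for AtbashKey, would therefore naturally hand back a list rather than a single value.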

Writing a key can be performed with the KeyWriter class. Its writeKeyResource method persists a key in one of the supported formats. The format is specified as a parameter of type KeyResourceType, which indicates the required format such as PEM, Java KeyStore, JWK, etc.

The specific type of PEM (like PKCS1, PKCS8, etc.) is defined by the configuration parameters.

Further parameters define the password or passphrase for the key (if needed) and the one for the file as a whole, for example in the case of the Java KeyStore format.
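As an illustration of what persisting a key in PEM format involves, here is a minimal sketch using only the JDK (java.util.Base64 needs Java 8 or later). It is not the KeyWriter implementation: the class PemWriteSketch and its methods are hypothetical, and it only covers an unencrypted public key in X.509 encoding.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.KeyFactory;
import java.security.KeyPairGenerator;
import java.security.PublicKey;
import java.security.spec.X509EncodedKeySpec;
import java.util.Base64;

final class PemWriteSketch {

    private static final String HEADER = "-----BEGIN PUBLIC KEY-----";
    private static final String FOOTER = "-----END PUBLIC KEY-----";

    // Wraps the X.509 (DER) encoding of a public key in PEM armour, 64 characters per line.
    static String toPem(PublicKey key) {
        Base64.Encoder encoder = Base64.getMimeEncoder(64, "\n".getBytes(StandardCharsets.US_ASCII));
        return HEADER + "\n" + encoder.encodeToString(key.getEncoded()) + "\n" + FOOTER + "\n";
    }

    // Parses the PEM text back into a key, to check that nothing was lost on the way.
    static PublicKey fromPem(String pem, String algorithm) {
        String base64 = pem.replace(HEADER, "").replace(FOOTER, "").replaceAll("\\s", "");
        try {
            byte[] der = Base64.getDecoder().decode(base64);
            return KeyFactory.getInstance(algorithm).generatePublic(new X509EncodedKeySpec(der));
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("Not a valid " + algorithm + " public key", e);
        }
    }

    // Creates a throwaway RSA public key so the sketch has something to write.
    static PublicKey newRsaPublicKey() {
        try {
            KeyPairGenerator generator = KeyPairGenerator.getInstance("RSA");
            generator.initialize(2048);
            return generator.generateKeyPair().getPublic();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("RSA is not available on this JVM", e);
        }
    }

    public static void main(String[] args) {
        PublicKey key = newRsaPublicKey();
        String pem = toPem(key);
        System.out.print(pem);
        System.out.println("round trip ok: " + key.equals(fromPem(pem, "RSA")));
    }
}
```

Private keys, encrypted PEM variants, and the other formats need more work than this, which is the part a dedicated writer class takes off your hands.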

The last piece of functionality around keys is reading them back from any of the supported formats. This is implemented in the KeyReader class, which is again a CDI bean that can also be instantiated directly when no CDI environment is available.

It contains a readKeyResource() method which can read all the keys in a resource (like a PEM file, Java KeyStore, JWK, etc.). As a parameter, an instance of KeyResourcePasswordLookup is supplied, which retrieves a password in those cases where it is needed (to read the file or decrypt the key).

The method returns a list of AtbashKeys because a resource can contain more than one key.
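The idea of reading every key from one resource, with passwords supplied by a lookup, can be sketched with the JDK's own KeyStore API. This is an illustration only, not the KeyReader implementation: the PasswordLookup interface, the class name, and the in-memory JCEKS store with two all-zero AES demo keys are assumptions made for the example.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.security.Key;
import java.security.KeyStore;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import javax.crypto.spec.SecretKeySpec;

final class KeyStoreReadSketch {

    // Hypothetical callback that supplies passwords on demand.
    interface PasswordLookup {
        char[] storePassword();
        char[] keyPassword(String alias);
    }

    // Simple lookup that answers with the same two passwords every time.
    static PasswordLookup fixedPasswords(final char[] storePassword, final char[] keyPassword) {
        return new PasswordLookup() {
            public char[] storePassword() { return storePassword; }
            public char[] keyPassword(String alias) { return keyPassword; }
        };
    }

    // Reads every key entry from a JCEKS key store held in memory.
    static List<Key> readAll(byte[] storeBytes, PasswordLookup lookup) {
        try {
            KeyStore store = KeyStore.getInstance("JCEKS");
            store.load(new ByteArrayInputStream(storeBytes), lookup.storePassword());
            List<Key> keys = new ArrayList<Key>();
            for (String alias : Collections.list(store.aliases())) {
                if (store.isKeyEntry(alias)) {
                    keys.add(store.getKey(alias, lookup.keyPassword(alias)));
                }
            }
            return keys;
        } catch (Exception e) {
            throw new IllegalStateException("Could not read the key store", e);
        }
    }

    // Builds a small store with two AES keys (all-zero demo material) to have something to read.
    static byte[] sampleStore(char[] storePassword, char[] keyPassword) {
        try {
            KeyStore store = KeyStore.getInstance("JCEKS");
            store.load(null, null);
            KeyStore.ProtectionParameter protection = new KeyStore.PasswordProtection(keyPassword);
            store.setEntry("first", new KeyStore.SecretKeyEntry(new SecretKeySpec(new byte[16], "AES")), protection);
            store.setEntry("second", new KeyStore.SecretKeyEntry(new SecretKeySpec(new byte[32], "AES")), protection);
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            store.store(out, storePassword);
            return out.toByteArray();
        } catch (Exception e) {
            throw new IllegalStateException("Could not build the sample key store", e);
        }
    }

    public static void main(String[] args) {
        char[] storePassword = "store-secret".toCharArray();
        char[] keyPassword = "key-secret".toCharArray();
        byte[] bytes = sampleStore(storePassword, keyPassword);
        List<Key> keys = readAll(bytes, fixedPasswords(storePassword, keyPassword));
        System.out.println("keys found: " + keys.size());
    }
}
```

Separating the file password from the per-key password in the lookup mirrors the two situations mentioned above: opening the resource and decrypting an individual key.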

This key support is an initial version and will be improved in future releases of atbash-jwt-support with more features and more supported formats.

Atbash Rest Client

A first release was done mid-June and contained an implementation in Java 7, for Java SE and Java EE, which is compatible with the MicroProfile Rest Client specification (see here). It allows you to ‘inject’ or create (useful in Java SE environments) a system-generated Rest client based on the definition of your JAX-RS endpoint in an interface class.

In this release, the RestClientBuilderListener from the MP Rest Client spec 1.1 is added and implemented so that additional providers can be defined in a general way. This is important for the Octopus release: the credentials stored within the Octopus context can be added to the JAX-RS call automatically, without the need to specify the providers manually.

Atbash Octopus

And of course, many new features have been added to Octopus, either migrated from the old Octopus or newly written.

The highlights are:

- Added support for the Keycloak server. JSF applications can use the authentication and authorization from Keycloak-configured realms. Also, the AccessToken from it can be passed on in the header of other requests and verified by JAX-RS endpoints. The only things needed are the location of the Keycloak server and the realm config in JSON (which is supplied by Keycloak).

- The SPI option to pass the expected password for a user can now handle hashed passwords. Both the ‘standard’ MessageDigest algorithms, like SHA-256, and the key derivation function PBKDF2 can be defined easily.

- The authorization annotations, like @RequiresPermissions, can be specified on JAX-RS methods without the need to define those resources as CDI or EJB beans.

- Authentication and authorization information can be converted automatically to an MP JWT Auth compliant format and used in calls to JAX-RS endpoints. This makes it possible, for example, to seamlessly integrate JAX-RS resources protected by Keycloak and MP JWT.
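To make the two families of password hashing named in the list above concrete, here is a minimal sketch with the plain JDK. It does not show how Octopus is configured or how its SPI is called; the class PasswordHashSketch, the method names, and the iteration count are assumptions for illustration. PBKDF2WithHmacSHA1 is used because it is also available on Java 7.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.security.SecureRandom;
import javax.crypto.SecretKeyFactory;
import javax.crypto.spec.PBEKeySpec;

final class PasswordHashSketch {

    // Salted single-pass SHA-256 digest, the 'MessageDigest' style of hashing.
    static byte[] sha256(char[] password, byte[] salt) {
        try {
            MessageDigest digest = MessageDigest.getInstance("SHA-256");
            digest.update(salt);
            return digest.digest(new String(password).getBytes(StandardCharsets.UTF_8));
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 is not available on this JVM", e);
        }
    }

    // PBKDF2 key derivation: deliberately slow, controlled by the iteration count.
    static byte[] pbkdf2(char[] password, byte[] salt, int iterations, int lengthInBits) {
        try {
            PBEKeySpec spec = new PBEKeySpec(password, salt, iterations, lengthInBits);
            SecretKeyFactory factory = SecretKeyFactory.getInstance("PBKDF2WithHmacSHA1");
            return factory.generateSecret(spec).getEncoded();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("PBKDF2 is not available on this JVM", e);
        }
    }

    // Compares the stored hash with a freshly computed one using the JDK's digest comparison.
    static boolean matches(byte[] expected, byte[] actual) {
        return MessageDigest.isEqual(expected, actual);
    }

    public static void main(String[] args) {
        byte[] salt = new byte[16];
        new SecureRandom().nextBytes(salt);
        char[] password = "s3cret".toCharArray();

        byte[] stored = pbkdf2(password, salt, 10000, 256);
        System.out.println("correct password accepted: " + matches(stored, pbkdf2(password, salt, 10000, 256)));
        System.out.println("wrong password accepted:   " + matches(stored, pbkdf2("other".toCharArray(), salt, 10000, 256)));
    }
}
```

In both cases the application only ever stores the salt and the hash, and checks a login attempt by recomputing the hash from the supplied password.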

And there are too many other features to describe here in detail. Work on the user manual has also started, and it will be announced soon.

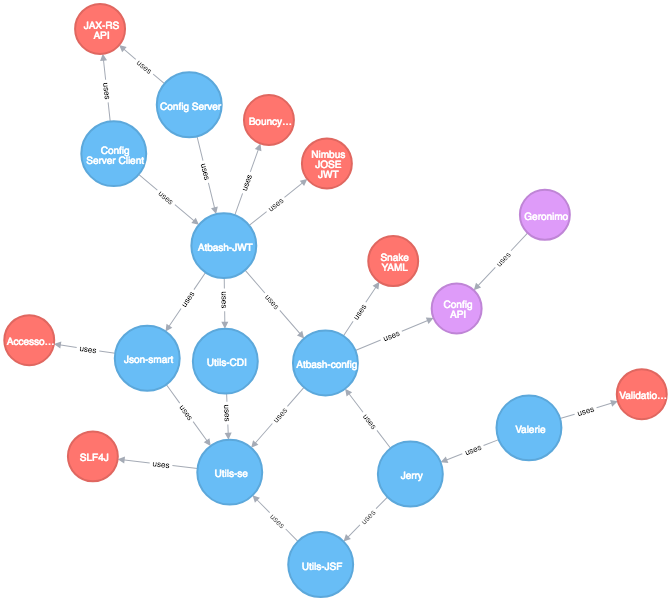

Overview of all released frameworks

Utilities : 0.9.2

Set of utilities for Java SE, CDI and plain JSF which are very useful in many projects running in one of these environments.

- Added utility class for HEX encoding (next to the BASE64 encoding)

- Added support for byte arrays and encoding (HEX and BASE64) through the ByteSource class.
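For readers unfamiliar with the two encodings, the following minimal sketch shows what HEX and BASE64 encoding of a byte array look like using only the JDK (java.util.Base64 needs Java 8 or later). It does not use the Atbash utility classes or ByteSource; EncodingSketch and its methods are hypothetical names.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

final class EncodingSketch {

    private static final char[] DIGITS = "0123456789abcdef".toCharArray();

    // Encodes each byte as two lowercase hexadecimal characters.
    static String toHex(byte[] data) {
        StringBuilder result = new StringBuilder(data.length * 2);
        for (byte b : data) {
            result.append(DIGITS[(b >> 4) & 0x0f]).append(DIGITS[b & 0x0f]);
        }
        return result.toString();
    }

    // Decodes a hexadecimal string back into bytes.
    static byte[] fromHex(String hex) {
        if (hex.length() % 2 != 0) {
            throw new IllegalArgumentException("Hex input must have an even length");
        }
        byte[] result = new byte[hex.length() / 2];
        for (int i = 0; i < result.length; i++) {
            int high = Character.digit(hex.charAt(2 * i), 16);
            int low = Character.digit(hex.charAt(2 * i + 1), 16);
            if (high < 0 || low < 0) {
                throw new IllegalArgumentException("Not a hex character at position " + (2 * i));
            }
            result[i] = (byte) ((high << 4) | low);
        }
        return result;
    }

    // Encodes the same bytes as BASE64 for comparison.
    static String toBase64(byte[] data) {
        return Base64.getEncoder().encodeToString(data);
    }

    public static void main(String[] args) {
        byte[] data = "Atbash".getBytes(StandardCharsets.US_ASCII);
        System.out.println(toHex(data));    // prints 417462617368
        System.out.println(toBase64(data)); // prints QXRiYXNo
    }
}
```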

JSON-smart : 0.9.1

A small library (for Java 7) which can convert JSON to Java instances and vice versa.

- Added support for @JsonProperty to define the name of a JSON property.

- Contains an SPI so that other naming annotations (like the Jackson ones) can be used.

Atbash-config : 0.9.2

Extension for the MicroProfile Config implementations. Also a Java 7 port of Apache Geronimo Config.

- Configuration for the base name (with serviceLoader class) is optional.

- Port of MicroProfile Config 1.3 features to Java 7.

JWT Support : 0.9.0

Convert Java instances to JWT and vice versa, with extensive support for cryptographic keys (reading, writing, creating) covering multiple types (like RSA, EC, and HMAC keys) and formats (like JWK, JWKSet, PEM, and KeyStore).

- Support for reading and writing multiple formats (PEM, KeyStore, JWK and JWKSet).

- Better support for JWT verification with keys using the concepts of KeySelector and KeyManager.

Atbash config server : 0.9.1

Configuration source for MP Config as a server supplying config through JAX-RS endpoints.

- Added Payara Micro as a supported server to serve the configuration.

Atbash Rest Client : 0.5.1

Rest client implementation for Java 7.

- Included RestClientBuilderListener from MP Rest Client 1.1 (to be able to define providers globally)

Octopus : 0.4

- Integration with Keycloak (Client Credentials for Java SE, AuthorizationCode grant for Web, AccessToken for JAX-RS)

- Support for hashed passwords (MessageDigest ones and PBKDF2)

- Support for the MP Rest Client, with providers available to add tokens for MP JWT Auth and Keycloak.

- Logout functionality for Web.

- Authentication events.

- More features for JAX-RS integration (authorization violations on JAX-RS resource [no need for CDI or EJB], correct 401 return messages, … )

- Support for default user filter (no need to define user filter before authorizationFilter)

Conclusion

The release contains a lot of goodies related to security. In the coming months, new features will be added, support for Java 8 and 11 is planned, and user manuals and cookbooks will become available to get you started with all those goodies.

The Atbash repositories, with some more info and of course the code, can be found on GitHub.

Have fun.