Introduction

With Java EE, you can create enterprise-type applications quickly because you can concentrate on implementing the business logic.

You can also create applications which are more micro-service oriented, but some handy ‘features’ are not yet standardised. Standardisation is the process of specifying best practices, and it of course takes some time to discover and validate those best practices.

The MicroProfile group wants to create standards for those micro-services concepts which are not yet available in Java EE. Their motto is

Optimising Enterprise Java for a micro-services architecture

This ensures that application servers which follow these specifications are compatible with each other, just as with Java EE itself. It also prepares the introduction of these specifications into Java EE, which is now Jakarta EE under the governance of the Eclipse Foundation.

Specification

There are already quite a few specifications available under the MicroProfile banner. Have a look at the MicroProfile site to learn more about them.

The topics range from configuration (Config) and security (JWT tokens) to operations (Metrics, Health, Tracing), resilience (Fault Tolerance), documentation (OpenAPI) and more.
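To give a feel for what the Config specification offers, here is a plain-JDK sketch of its default lookup order: system properties win over environment variables, which win over the `META-INF/microprofile-config.properties` file. This is not the MicroProfile API itself. The class `ConfigLookupSketch` and its constructor are hypothetical, and the sketch ignores details such as the name mapping applied to environment variables. With a real implementation, the comparable call is `ConfigProvider.getConfig().getOptionalValue(key, String.class)`.

```java
import java.util.List;
import java.util.Map;
import java.util.Optional;

/**
 * Simplified illustration of the default source priority in MicroProfile Config.
 * Hypothetical class for explanation only; it does not use the MicroProfile API.
 */
public class ConfigLookupSketch {

    // Sources ordered from highest to lowest priority.
    private final List<Map<String, String>> sourcesByPriority;

    public ConfigLookupSketch(Map<String, String> systemProperties,
                              Map<String, String> environmentVariables,
                              Map<String, String> propertiesFile) {
        this.sourcesByPriority =
                List.of(systemProperties, environmentVariables, propertiesFile);
    }

    /** Returns the value from the first (highest priority) source that defines the key. */
    public Optional<String> getOptionalValue(String key) {
        for (Map<String, String> source : sourcesByPriority) {
            String value = source.get(key);
            if (value != null) {
                return Optional.of(value);
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        ConfigLookupSketch config = new ConfigLookupSketch(
                Map.of("app.greeting", "Hello from -D"),   // stands in for system properties
                Map.of(),                                   // stands in for environment variables
                Map.of("app.greeting", "Hello from file",   // stands in for the properties file
                       "app.name", "demo"));

        // The system property overrides the value from the file.
        System.out.println(config.getOptionalValue("app.greeting").orElse("n/a")); // prints "Hello from -D"
        // Only the file defines this key, so its value is used.
        System.out.println(config.getOptionalValue("app.name").orElse("n/a"));     // prints "demo"
    }
}
```

The point of the layering is that the packaged application carries sensible defaults, while the environment it runs in can override them without a rebuild.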

Implementations

Just as with Java EE, there are different implementations available for each spec. The difference is that there is no Reference Implementation (RI), the special implementation which goes together with the specification documents.

All implementations are equal.

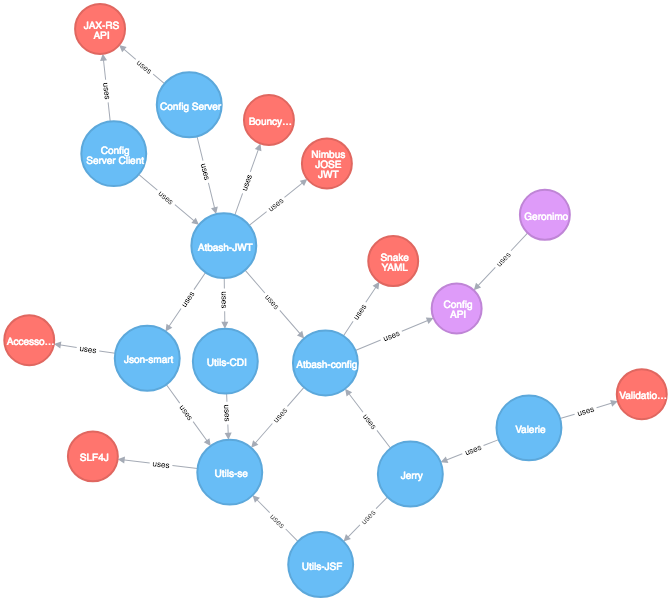

You can find standalone implementations for all specs within the SmallRye umbrella project or at Apache (mostly under the Apache Geronimo umbrella).

There are also dedicated ‘server’ implementations which are written specifically for MicroProfile. They are mostly based on Jetty or Netty, with implementations of all the specs added on top to form a compatible server.

Examples are KumuluzEE, Hammock, Launcher, Thorntail (v4) and Helidon.

But implementations are also made available within Java EE servers, which brings both worlds tightly together. Examples are Payara and OpenLiberty, and more servers such as WildFly and TomEE are following this path.

Using MicroProfile in Stock Java EE Servers

When you have a large legacy application which still needs to be maintained, you can add the MicroProfile implementations to the server and benefit from their features.

It can be the first step in taking parts out of your large monolith and placing them in separate micro-services. When your package structure is already well defined, the separation can be done relatively easily and without the need to rewrite your application.

However, adding individual MicroProfile implementations to the server is not always successful, because these implementations rely on advanced CDI features. To try things out, take one of the standalone implementations from SmallRye or Apache (Geronimo) and add it to the lib folder of your application server. Config is probably the easiest one to test.

Dedicated Java EE Servers

There is also a much easier way to try out the combination: choose a certified Java EE server which already has all the MicroProfile implementations on board. Examples today are Payara and OpenLiberty. Other vendors are going the same way, as the integration has started for WildFly and TomEE.

Since the integration part is already done, you can just start using them. Add the MicroProfile Maven BOM as a dependency to your pom file and you are ready to go.

    <dependency>
        <groupId>org.eclipse.microprofile</groupId>
        <artifactId>microprofile</artifactId>
        <version>2.0.1</version>
        <type>pom</type>
        <scope>provided</scope>
    </dependency>

This way, you decide how much Java EE or MicroProfile functionality you want to use within your application, and you can migrate gradually from an existing Java EE legacy application to a more micro-service-like version.

In addition, there are Maven plugins which convert your application to an executable uber jar, or you can run your WAR file using the hollow jar technique, with Payara Micro for example.

Conclusion

With the inclusion of the MicroProfile implementations in servers like Payara and OpenLiberty, you can enjoy the features of that framework in the Java EE application server you are already familiar with.

It allows you to use these features when you need them, to create more micro-service-like applications, and to start decomposing your legacy application into smaller parts if you feel the need for this.

Enjoy it.